Apache Kafka is an open-source distributed event streaming platform used by many companies for high-performance data pipelines, streaming analytics, data integration and mission-critical applications.

Here is a quick overview of the core concepts of the Kafka architecture:

- Kafka scales horizontally

- Kafka runs as a cluster on one or more servers

- Kafka stores a stream of records in categories called topics

- Each record consists of a key, a value, and a timestamp

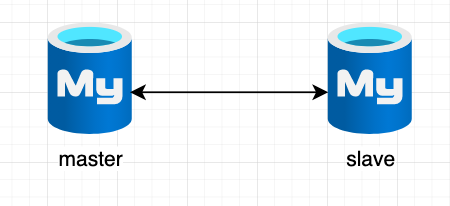
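
To make the record and topic concepts more concrete, here is a minimal in-memory sketch in Python. It is not how Kafka is implemented and it does not talk to a real cluster. The names `Record`, `ToyTopic`, and `append` are made up for illustration, and the CRC32-based partition choice is only a stand-in for whatever partitioner a real Kafka client uses. The idea it tries to show: a topic is an append-only sequence of records, each with a key, a value, and a timestamp, and splitting a topic into partitions is what lets the load spread across several servers.

```python
import time
import zlib
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Record:
    """One record: a key, a value, and a timestamp (milliseconds)."""
    key: Optional[bytes]
    value: bytes
    timestamp_ms: int


@dataclass
class ToyTopic:
    """Hypothetical toy model of a topic: a named set of append-only logs."""
    name: str
    num_partitions: int = 3
    partitions: List[List[Record]] = field(default_factory=list)

    def __post_init__(self) -> None:
        # One independent append-only log per partition.
        self.partitions = [[] for _ in range(self.num_partitions)]

    def append(self, key: Optional[bytes], value: bytes,
               timestamp_ms: Optional[int] = None) -> Tuple[int, int]:
        """Append a record and return (partition, offset)."""
        if timestamp_ms is None:
            timestamp_ms = int(time.time() * 1000)
        # Assumption: CRC32 stands in for a real partitioner. Records with
        # the same key always land in the same partition. Keyless records
        # all go to one partition here, which is a simplification.
        partition = zlib.crc32(key or b"") % self.num_partitions
        log = self.partitions[partition]
        log.append(Record(key, value, timestamp_ms))
        return partition, len(log) - 1
```

Appending two records with the same key to a `ToyTopic` puts them in the same partition at consecutive offsets, so their relative order is preserved within that partition.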

One example use case

Small, resource-constrained systems such as a system on a chip (SoC) can produce important data and simply stream it to the Kafka broker.

They need no application-side logic for data storage, data replication, and so on. That logic is handled by the Kafka broker itself, so the small systems can concentrate on their important tasks and on sending the results to the Kafka broker.
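
The device side of this use case can be sketched as follows. This is only an illustration under stated assumptions: `publish_reading`, the topic name `sensor-readings`, the JSON payload layout, and the `send` callable are all hypothetical. On a real device, `send` would wrap the producer call of whichever Kafka client library is in use; here it is injected so that the sketch runs without a broker.

```python
import json
import time
from typing import Callable, Optional

# Hypothetical signature: send(topic, key, value, timestamp_ms).
# A real deployment would back this with a Kafka client's producer.
SendFn = Callable[[str, bytes, bytes, int], None]


def publish_reading(send: SendFn, device_id: str, temperature_c: float,
                    timestamp_ms: Optional[int] = None) -> None:
    """Build one record from a sensor reading and hand it to `send`.

    The device keeps no local storage or replication logic: it only
    serializes the reading and passes it on.
    """
    if timestamp_ms is None:
        timestamp_ms = int(time.time() * 1000)
    key = device_id.encode("utf-8")  # same device -> same key
    value = json.dumps({"temperature_c": temperature_c}).encode("utf-8")
    send("sensor-readings", key, value, timestamp_ms)
```

Passing a function that appends its arguments to a list is enough to try this out locally. Everything beyond building and sending the record is left to the Kafka side, which is the point of the use case.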